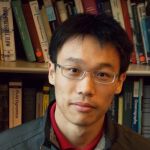

Speaker: Yian Ma

Affiliation: UC San Diego

ABSTRACT:

I will introduce some recent progress towards understanding the scalability of Markov chain Monte Carlo (MCMC) methods and their comparative advantage with respect to variational inference. I will discuss an optimization perspective on the infinite-dimensional probability space, where MCMC leverages stochastic sample paths while variational inference projects the probabilities onto a finite-dimensional parameter space. Three ingredients will be the focus of this discussion: non-convexity, acceleration, and stochasticity. This line of work is motivated by epidemic prediction, where we need uncertainty quantification for credible predictions and informed decision-making with complex models and evolving data.

BIO:

Yian Ma is an assistant professor at the Halıcıoğlu Data Science Institute and an affiliated faculty member in the Computer Science and Engineering Department of the University of California San Diego. Prior to UCSD, he spent a year as a visiting faculty member at Google Research. Before that, he was a post-doctoral fellow at EECS, UC Berkeley. Yian completed his Ph.D. at the University of Washington and obtained his bachelor’s degree at Shanghai Jiao Tong University.

His current research primarily revolves around scalable inference methods for credible machine learning. This involves designing Bayesian inference methods to quantify uncertainty in the predictions of complex models; understanding the computational and statistical guarantees of inference algorithms; and leveraging these scalable algorithms to learn from time series data and perform sequential decision-making tasks.

Hosted by Professor Quanquan Gu

Location | Hybrid: Via Zoom Webinar & In Person @ 3400 Boelter Hall

Date/Time:

Nov 18, 2021, 4:15 pm - 5:45 pm

Location:

3400 Boelter Hall, 420 Westwood Plaza, Los Angeles, California 90095