by Anton Shkel and Abraham Grair

Why do you need a file system in your operating system?

1) Persistent Storage - We need a system to maintaining our data after power is lost, even in cases of failures and crashes.

2) Organization - We need a system that makes it easy to find and change information (if the user knows where it is). It should also be flexible.

3) Storage Space - We need much more space than RAM allows us to have.

4) Security - Needs to be secure from threats.

5) Reliability - Needs to be reliable in case of failures

6) Performance - Needs to be fast.

All of

the previous features are required in a successful file system.

However, performance is a crucial and important feature of a file

system, and will be focused on in this lecture.

Software engineers sometimes focus too much on performance, which might

lead to a problem called premature optimization in which the system is

fast, but buggy. For this reason it is important to keep everything in

perspective.

Source: R1Soft

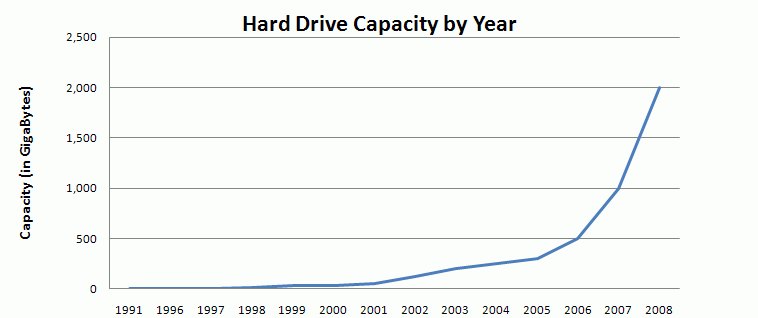

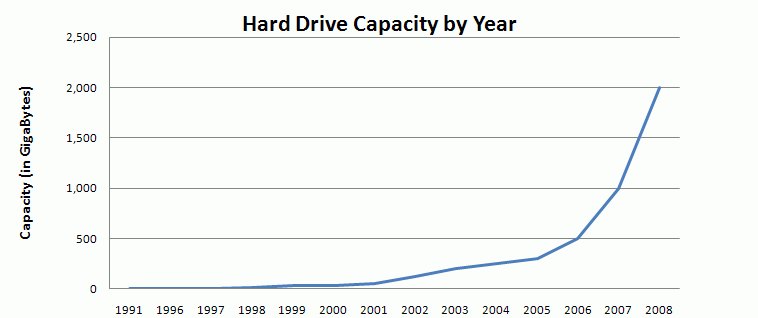

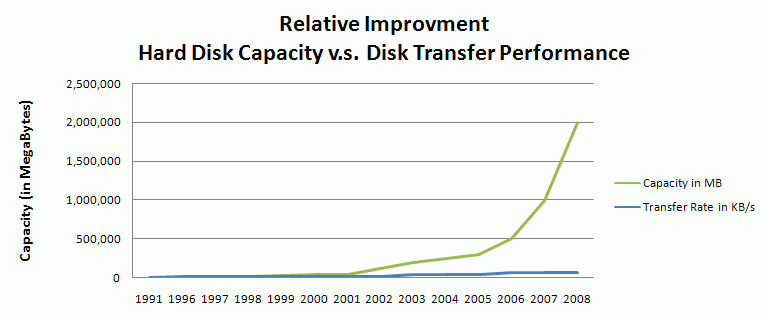

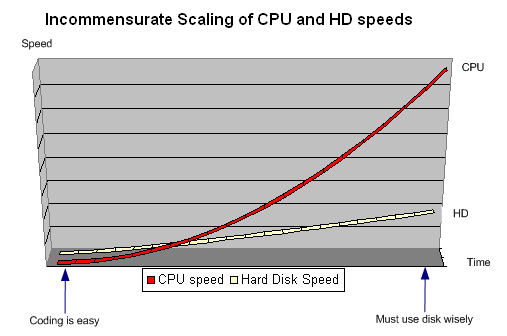

Today's file systems were designed ~20 years ago, so this incommensurate scaling has led to many inefficiencies in performance. A successful file system designed today will take projected performance trends of the upcoming decades into consideration.

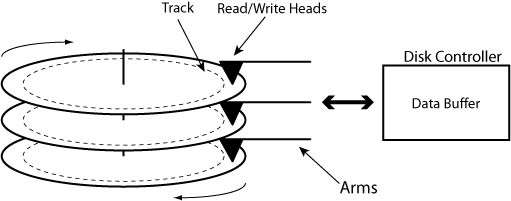

The disks spin at a fixed spindle speed, and data is read by magnetic read/write heads as it passes under them. The arms of the reading system are all connected to a single actuator structure. For this reason, it is easiest to read data in a cylindrical shape with equidistant tracks on each spinning disk (platter).

In order to read data on another cylinder, you need to tell the Disk Controller to:

1) Accelerate arms in right direction.

2) Decelerate arms about half way to target track.

3) Settle into the proper track.

4) Wait for the sector with the data to rotate and pass under the read head.

After the previous steps, the data can be read and transferred to a buffer in the disk controller where you can retrieve it using DMA or programmed IO as we discussed in previous lectures. In order to figure out how long the reading operation will take, we need to get some information from the disk manufacturer.

| Specification |

Seagate

Barracuda LP2 HDD tech specs |

Corsair

Force GT SSD tech specs |

| External

Transfer Rate: the rate at which can be moved from disk-buffer to bus |

300 Mb/s |

3 Gb/s |

| Sustained

Data Read: the rate at which data can be physically read. For mechanical hard disks this is the rate at which data passes under the read head. |

95 Mb/s |

555 Mb/s |

| Sustained Data Write: | - |

495 Mb/s |

| 4KB

Random Write: refers to the rate of I/O operations when writing page-sized chunks of data to random locations. |

- |

80,000 IOPS |

| Cache: the size of the buffer in the disk controller. |

32 MB |

- |

| Average

Latency: for hard disks, this it the time it takes on average to locate a sector within the current "cylinder". Effectively, this is the about time it takes for the disk to rotate 180 degrees. |

5.5 ms |

- |

| Average

Seek Time: the average amount of time it takes to find another "cylinder". |

10 ms |

- |

| Spindle

Speed: physical speed in RPMs of a hard disk. |

5900 RPM

(98.33 Hz) |

n/a |

| Contact

Starts/Stops: average number of times that the disk can be powered on and off before failure. |

50,000 |

n/a |

| Non-recoverable

Read Error Rate: average rate at which errors occur that are not caught by error-correction features. (10-14 errors/bit is impressive!) |

10-14

errors/bit |

- |

| Annualized

Failure Rate (AFR): the estimated probability that the device will fail within the first year of use. |

0.32% |

0.44% |

| Mean

Time Before Failure (MTBF) the estimated time before the device fails. Note: 2,000,000 hours is over 200 years! Obviously the devices were not tested for this long, so this number is a very rough estimate. |

2,700,000 hours |

2,000,000 hours |

| Power

Consumption (Operating): the average power consumption during read/write operations. |

6.8 W |

2.5 W |

| Power

Consumption (Idle): the average power consumption when the device is not doing anything useful. |

5.5 W |

0.6 W |

| Operating

Shock: force required to break device while operating |

70 G |

1500 G |

| Non-Operating

Shock: force required to break device when off. SSDs do not have moving parts so this number is the same as operating shock. |

300 G |

1500 G |

| Acoustic

Energy

(Operating): noise generated by device during operation. Since SSDs do not have moving components, the acoustic energy from them is negligible. |

2.6 bels |

n/a |

| Acoustic Energy (Idle): | 2.5 bels |

n/a |

| Storage Capacity: | 2 TB |

60 GB |

| Price: | $150 |

$100 |

Speculation - trying to predict what the application will do, and preemptively start doing those actions.

1) Reading strategies:

Prefetching - guessing where the application will read from in the future, and reading these sectors while disk is idol and the CPU is busy.

Batching - the idea here is that the file system will read larger blocks of memory than what the user asked for. This will greatly improve our performance, however when the read blocks are too large, that can cause problems. Batching is a special case of prefetching.

2) Writing

strategies:

Dallying - after receiving a write instruction, the file system waits for a period of time in case additional write requests are made. In this way, This saves the file system from making unnecessary writes, and the physical motion of the hard disk is minimized.

Batching for write where you keep a buffer with the data that the application asked to store, and you wait in case other requests for writing are present.

note: you can use the Dallying approach for reading data as well. For example, when the reading heads are at the outer cylinder of the disk and the application asks for data on a cylinder that is far away, the operating system can dally a little bit and wait to see if there are more data to be read from the current cylinder before it asks to move the reading head.3) Locality of Reference - taking advantage of the fact that file access follows patterns in practice.

ex. Spatial Locality - taking advantage of the tendency of file accesses to appear near each other physically on the disk.

ex. Temporal Locality - taking advantage of the tendency of files that were modified or accessed near each other in time to be near each other physically.

Question: can you think of other localities that can help you while designing a file system?

1) Latency - delay between request and response.

2) Throughput - the number of requests per second that the file system can handle.

* Note: throughput and latency are often competing goals!

3) Utilization - % of resource devoted to performing useful work.

- 1ns cycle time (1 instruction/cycle).

- 1 Programmed IO (PIO) takes 1000 cycles (1 μs).

- 5 PIOs to send command to IO device (5 μs).

- disk latency of 50 μs.

- data processing takes 125 cycles/Byte (0.125 μs/B).

- 1 PIO/Byte to read data.

|

to send command: 5μs

disk latency: 50μs

inb PIO: 1μs

processing: 0.125μs

Therefore latency = 5μs + 50μs + 1μs + 0.125 μs = 56.125μs

Throughput = 1/56.125 = 17,817 requests/s

Utilization of CPU= 0.125/56.125 = 0.2%

|

to send command: 5μs (one

time)

disk latency: 50μs (one time)

inb PIO: 1μs (per byte)

processing: 0.125μs (per byte)

Therefore latency = 5 μs + 50 μs + 40 μs + 5 μs = 100

μs for the 40 Bytes buffer.

for each byte, we need:

100 μs / 40 Bytes = 2.5 μs

Throughput = (1 Byte) / (2.5 μs) = 400

(K Bytes) / s

Utilization = (0.125 μs * 40) / (5 μs + 50 μs + 40 μs + 5 μs) = 5%

|

Latency = (5 μs +50 μs + 40 μs) / 40 B = 2.375 μs for each Byte.

Since computation and disk latency occur in parallel, computation time vanishes. This is not much of an improvement because the computation time (5 μs) is tiny relative to the total latency (95 μs). This improvement would be much more significant if computation and IO took a similar amount of time. Therefore using a larger buffer would be more efficient.

If buffer size = 400 bytes, both IO and computation will start and

finish at roughly the same time.

The latency time for each byte will be 455 μs / 400 μs = 1.1375 μs.

The lease we can make that latency is 1 μs. The bottle neck in this case is the time for programmed IO to get the data off the device.

|

|

By using yield, we are involving the scheduler and allowing it

to

make a decision about which process should have control of the CPU. In

this way, we are maximizing the total utility of the CPU while our

application is not doing anything useful.