CS 111

Lecture 16 (5/29/12)

Abimael Arevalo

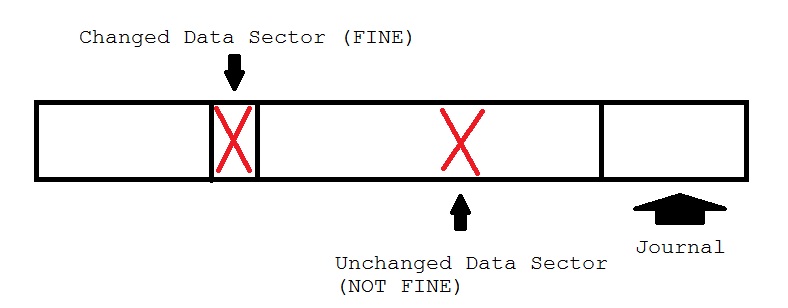

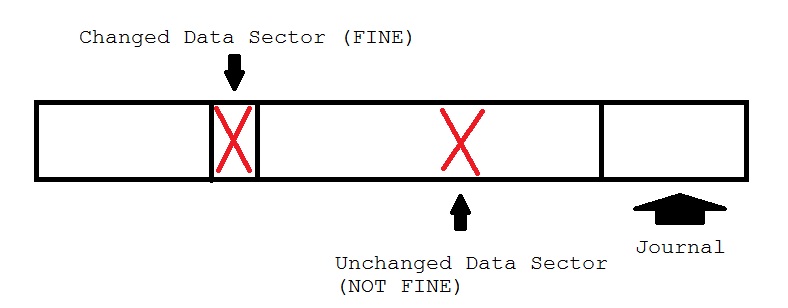

Media Faults

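Disk failures: can they be handled via logging? Not entirely.

If a sector that has not been changed recently fails, its data cannot be reconstructed from the journal, because the journal only records recent changes.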

RAID (Redundant Array of Independent Disks; the original Berkeley name used "Inexpensive")

- Simulate a large drive with many small drives.

- Save money and gain reliability.

RAID levels a la Berkeley

-

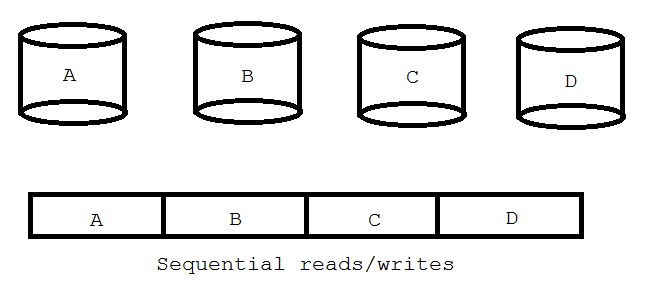

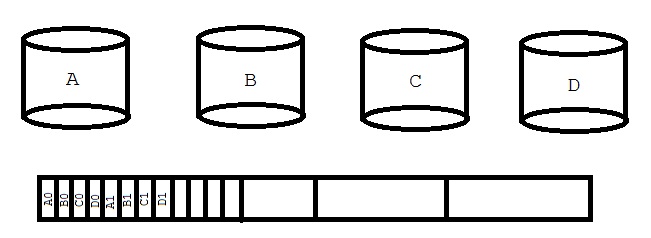

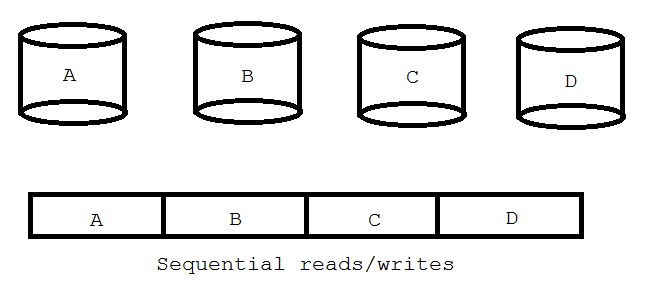

RAID 0: no redundancy, just a simulated big disk.

-

Concatenation: performance of the virtual disk is roughly that of a physical disk.

-

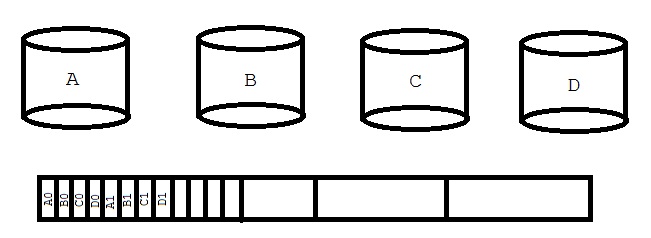

Striping: Divide A, B, C, and D into pieces and places the pieces into the drives. Each drive can be run in parallel to extract the data. Virtual disk performance is roughly four times faster than a physical disk.

-

Growing is easier in concatenation than in striping.
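To make the difference between the two RAID 0 layouts concrete, here is a minimal C sketch of how a RAID 0 layer might map a virtual block number to a physical drive. It assumes fixed-size blocks and a stripe unit of `stripe_unit` blocks; the names `location`, `concat_map`, and `stripe_map` are hypothetical, made up for illustration.

```c
#include <assert.h>

/* Hypothetical result type: where a virtual block lives physically. */
struct location {
    int disk;    /* which physical drive */
    long block;  /* block number on that drive */
};

/* Concatenation: drive 0 holds virtual blocks [0, blocks_per_disk),
   drive 1 holds the next blocks_per_disk blocks, and so on. */
struct location concat_map(long vblock, long blocks_per_disk)
{
    assert(blocks_per_disk > 0 && vblock >= 0);
    struct location loc = { (int)(vblock / blocks_per_disk),
                            vblock % blocks_per_disk };
    return loc;
}

/* Striping: consecutive stripe units go to consecutive drives, wrapping
   around, so a large sequential read keeps every drive busy at once. */
struct location stripe_map(long vblock, int ndisks, long stripe_unit)
{
    assert(ndisks > 0 && stripe_unit > 0 && vblock >= 0);
    long unit = vblock / stripe_unit;    /* which stripe unit overall */
    long offset = vblock % stripe_unit;  /* block within that unit */
    struct location loc = { (int)(unit % ndisks),
                            (unit / ndisks) * stripe_unit + offset };
    return loc;
}
```

This also shows why growing is easier with concatenation: adding a drive to a concatenated array leaves `concat_map` unchanged for every existing block, but changing `ndisks` in `stripe_map` moves almost every existing block to a different drive.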

-

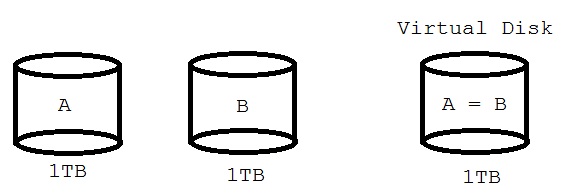

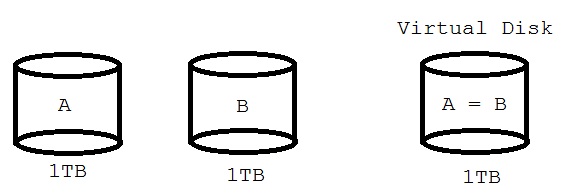

RAID 1: Mirroring

-

Write to both drives.

-

Read from either (can pick the closest disk head).

ASSUMPTION: reads can detect faults.
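A minimal C sketch of mirroring, using toy in-memory "drives" rather than real devices. The per-sector `bad` flag is a hypothetical stand-in for the drive reporting a read error, which is exactly the ASSUMPTION above; the names `drive`, `mirror_write`, and `mirror_read` are made up for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define NSECTORS 8
#define SECTOR_SIZE 16

/* Toy in-memory drive: sector data plus a flag modeling "this read fails". */
struct drive {
    char data[NSECTORS][SECTOR_SIZE];
    bool bad[NSECTORS];
};

/* Write to both drives. buf must hold SECTOR_SIZE bytes. */
void mirror_write(struct drive d[2], int sector, const char *buf)
{
    assert(sector >= 0 && sector < NSECTORS);
    for (int i = 0; i < 2; i++)
        memcpy(d[i].data[sector], buf, SECTOR_SIZE);
}

/* Read from either drive: try the preferred one first (e.g., the one whose
   head is closer) and fall back to the other if the read reports a fault.
   Returns 0 on success, -1 if both copies are unreadable. */
int mirror_read(const struct drive d[2], int sector, int preferred, char *buf)
{
    assert(sector >= 0 && sector < NSECTORS && (preferred == 0 || preferred == 1));
    for (int k = 0; k < 2; k++) {
        int i = (preferred + k) % 2;
        if (!d[i].bad[sector]) {
            memcpy(buf, d[i].data[sector], SECTOR_SIZE);
            return 0;
        }
    }
    return -1;
}
```

If reads could silently return wrong data instead of reporting an error, `mirror_read` would have no way to know it should try the other copy, which is why the assumption matters.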

-

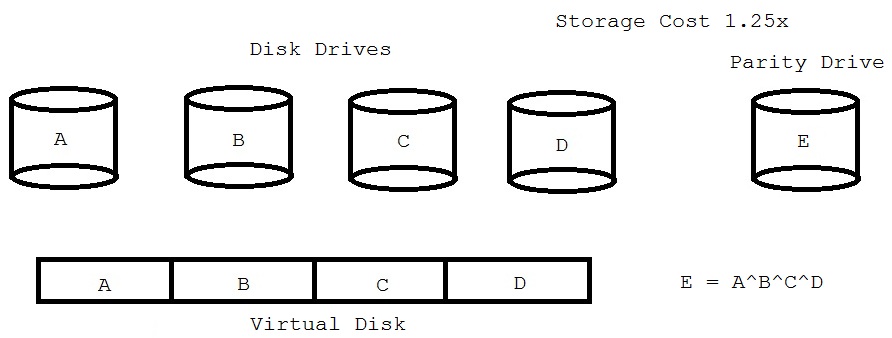

RAID 2,3,4,5,6,7,...

-

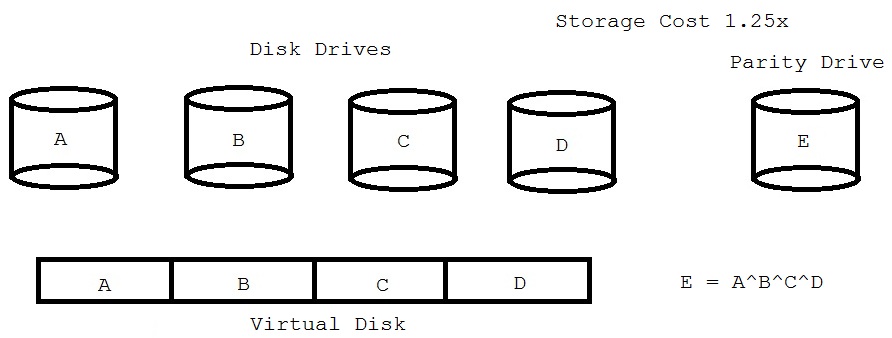

RAID 4

-

Reads are like RAID 0 concatenation and has worse read performance than RAID 0 striping.

-

Writes are like RAID 1 (need to read drive E before writing).

-

If C fails: C = A^B^E^D ('^' = XOR)
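The parity rules for RAID 4 can be sketched in a few lines of C. This is a minimal illustration, assuming E is the parity drive and blocks are plain byte arrays. `update_parity` shows the standard read-modify-write trick for a small write: read the old data and old parity, then set new parity = old parity ^ old data ^ new data, so the other data drives need not be read. The function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* XOR together the byte at each position of every block in data[].
   With data = {A, B, C, D} this computes the parity E = A ^ B ^ C ^ D.
   With data = {A, B, D, E} it reconstructs a failed C, since
   A ^ B ^ D ^ (A ^ B ^ C ^ D) = C. */
void xor_blocks(const uint8_t *data[], size_t nblocks, size_t len, uint8_t *out)
{
    assert(nblocks > 0);
    for (size_t j = 0; j < len; j++) {
        uint8_t p = 0;
        for (size_t i = 0; i < nblocks; i++)
            p ^= data[i][j];
        out[j] = p;
    }
}

/* Small write: replacing old_data with new_data on one drive.
   new parity = old parity ^ old data ^ new data, which is why the
   parity drive E must be read before it is written. */
void update_parity(const uint8_t *old_data, const uint8_t *new_data,
                   uint8_t *parity, size_t len)
{
    for (size_t j = 0; j < len; j++)
        parity[j] ^= old_data[j] ^ new_data[j];
}
```

Every write touches E, so in RAID 4 the parity drive becomes a bottleneck for write-heavy workloads.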

Disk Drive Reliability

-

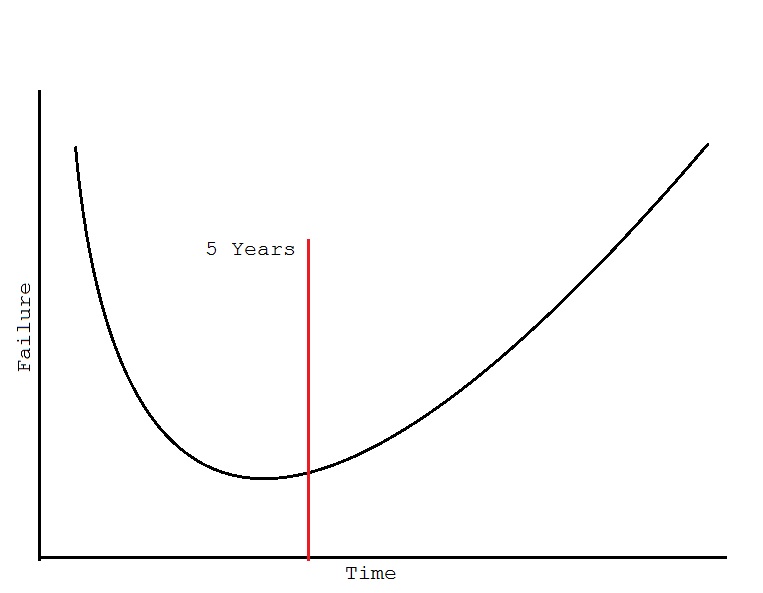

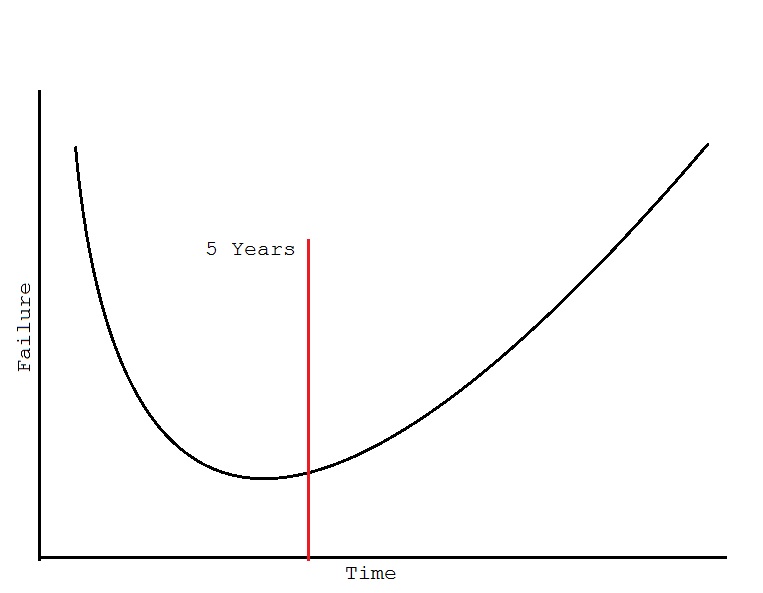

Mean time to failure is (typically) 300,000 hours (34 years), but in reality, drives get replaced every 5 years.

-

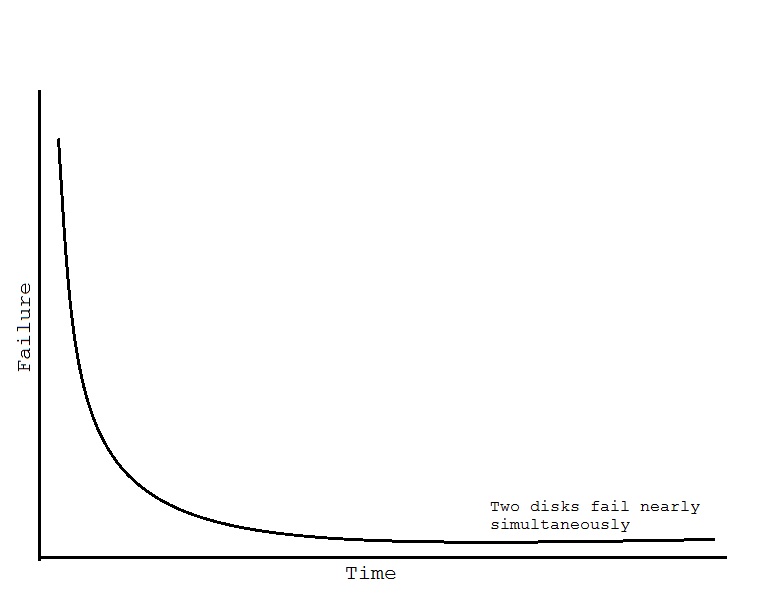

Probability distribution function for single disk failure.

-

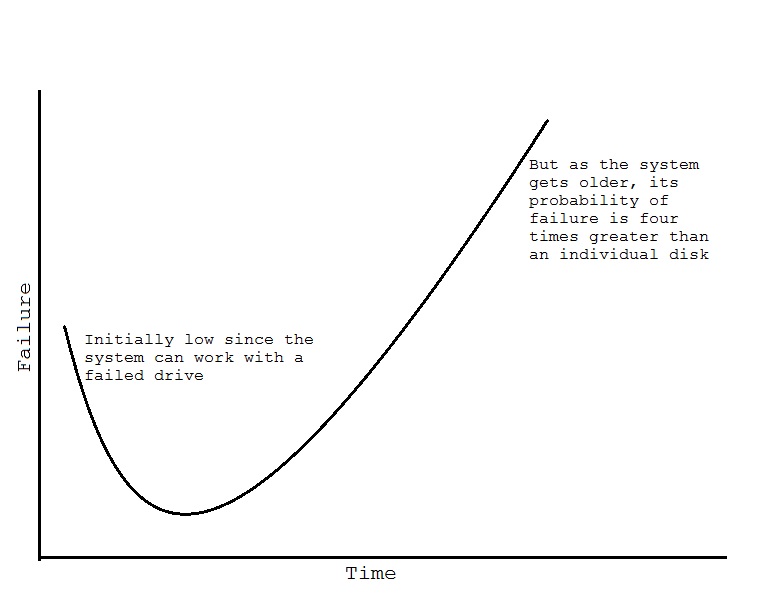

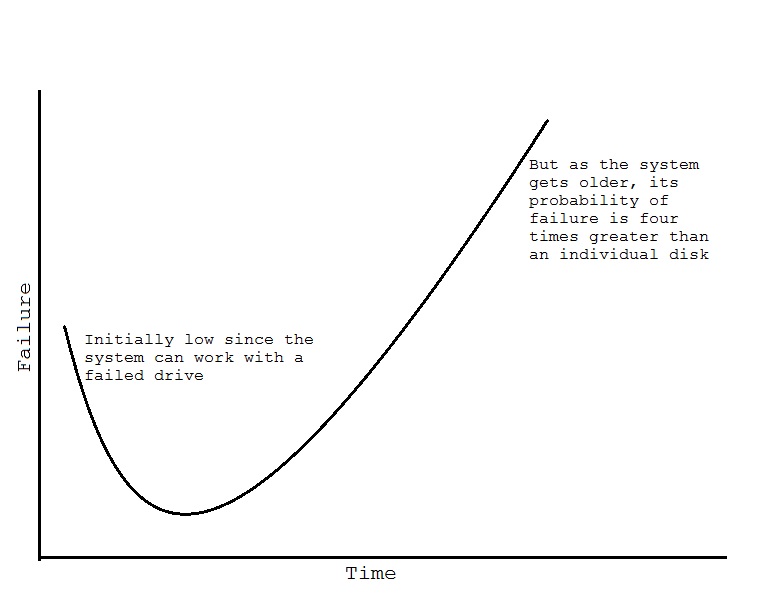

Probability distribution function for RAID 4 (never replace drives).

-

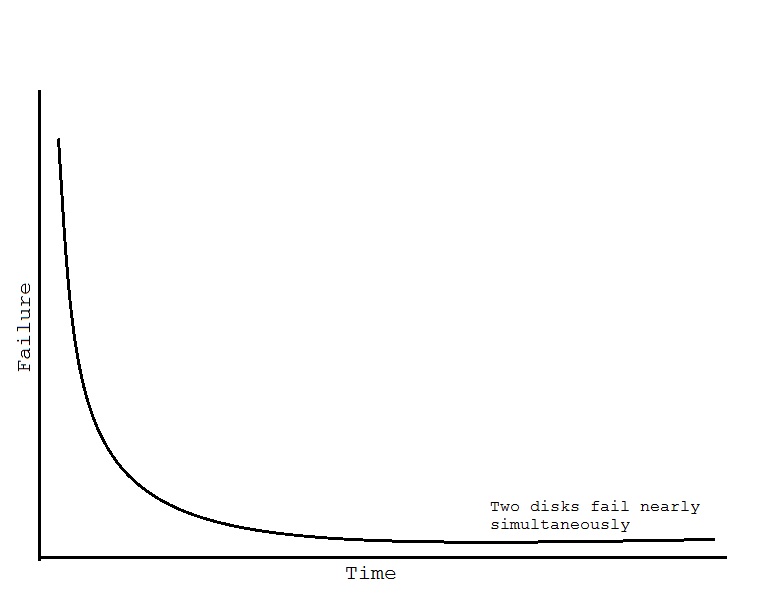

Probability distribution function for RAID 4 (assuming failed disks are replaced).

-

Disk fails.

-

(60 minutes later) operator replaces it.

-

(8 hours later) rebuilding phase. Depends on drive size.

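- RAID schemes can be nested (e.g., striping across mirrored pairs, often called RAID 10).

- Q: Does RAID make backups obsolete?

  A: No. We still need backups to recover from user errors (e.g., deleting a file by mistake), because RAID faithfully applies the mistake to every copy.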
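The replacement timeline is what makes a repaired RAID 4 array so much more reliable than one that is never repaired. A standard back-of-the-envelope model, assuming drive failures are independent and exponentially distributed (real drives only approximate this, and correlated failures make real arrays worse), puts numbers on it. The values below are assumptions taken from these notes: N = 5 drives (data drives A to D plus parity drive E), MTTF = 300,000 hours, and a repair window MTTR = 1 hour to replace plus about 8 hours to rebuild.

```latex
% Single drive: 8,766 hours per year (365.25 days)
\[ \frac{300{,}000\ \text{h}}{8{,}766\ \text{h/yr}} \approx 34.2\ \text{yr} \]

% Never replaced: data is lost at the second failure; expected time is
% the wait for the first of N drives plus the first of the remaining N-1
\[ \frac{\text{MTTF}}{N} + \frac{\text{MTTF}}{N-1}
   = (0.20 + 0.25)\,\text{MTTF} = 0.45\,\text{MTTF} \]

% Replaced: the first failure comes after about MTTF/N hours; data is lost
% only if one of the other N-1 drives fails within the MTTR window,
% which happens with probability about (N-1) MTTR / MTTF
\[ \text{MTTDL} \approx \frac{\text{MTTF}^2}{N(N-1)\,\text{MTTR}}
   = \frac{(3 \times 10^{5})^2}{5 \cdot 4 \cdot 9}\ \text{h}
   = \frac{9 \times 10^{10}}{180}\ \text{h}
   = 5 \times 10^{8}\ \text{h} \approx 5.7 \times 10^{4}\ \text{yr} \]
```

Under these assumptions, an unrepaired array is less reliable than a single drive, while prompt replacement makes data loss from drive failures very unlikely; shortening the rebuild window (smaller drives, faster rebuilds) improves the estimate proportionally.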

Distributed Systems

RPC (Remote Procedure Calls) vs. System Calls and Function Calls

-

Caller sees:

x = fft (buf, n);

Implementation:

send (buf, n); // to server

// Wait for response

return;

-

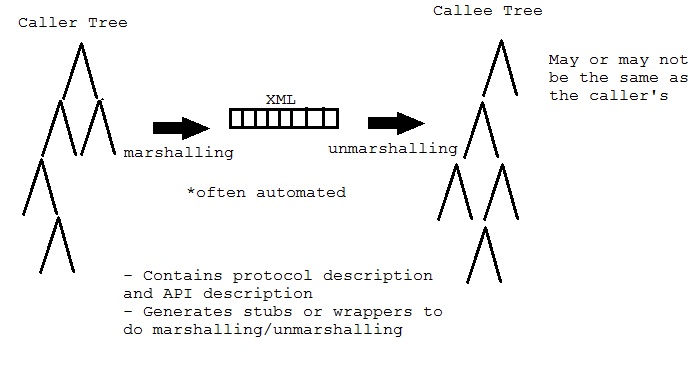

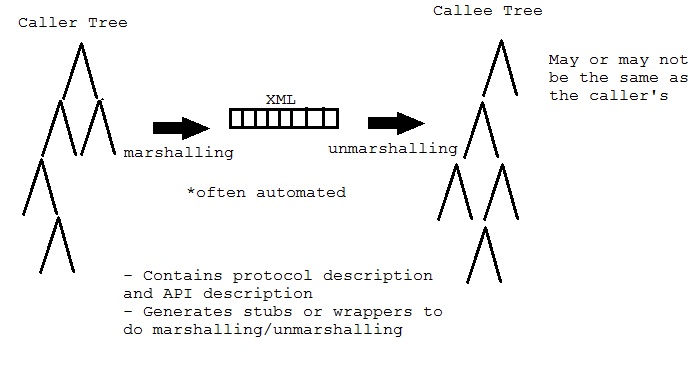

Caller and callee do not share address space. There is no call by reference (at least, not efficiently).

-

Caller and callee may be different architectures (ARM vs. SPARC or little vs. big endian).

Requires conversion:
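The stub and the marshaling step can be sketched together in C. To keep the example small and testable, it assumes a hypothetical remote procedure that sums an array (standing in for `fft`), marshals every value in big-endian byte order so both architectures agree on the bytes, and hands the message to a hypothetical `transport_fn`. The "network" here is an in-process loopback server; a real stub would use sockets, and on POSIX systems could use `htonl`/`ntohl` from `<arpa/inet.h>` instead of the hand-written helpers.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Marshal a 32-bit value in big-endian ("network") order, byte by byte,
   so the bytes on the wire are the same on little- and big-endian hosts. */
static void put_u32(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)(v >> 24);
    p[1] = (uint8_t)(v >> 16);
    p[2] = (uint8_t)(v >> 8);
    p[3] = (uint8_t)v;
}

static uint32_t get_u32(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Hypothetical transport: send a request, wait, fill in the reply,
   and return the reply length. */
typedef size_t (*transport_fn)(const uint8_t *req, size_t req_len,
                               uint8_t *reply, size_t reply_cap);

#define MAX_ARGS 64

/* Client stub: the caller just writes x = remote_sum(t, buf, n).
   The array is copied into the message by value, since pointers mean
   nothing in the server's address space. */
uint32_t remote_sum(transport_fn t, const uint32_t *buf, uint32_t n)
{
    uint8_t req[4 + 4 * MAX_ARGS], reply[4];
    assert(n <= MAX_ARGS);
    put_u32(req, n);
    for (uint32_t i = 0; i < n; i++)
        put_u32(req + 4 + 4 * i, buf[i]);
    size_t got = t(req, 4 + 4 * (size_t)n, reply, sizeof reply); /* send + wait */
    assert(got == sizeof reply); /* a real stub would report an error */
    return get_u32(reply);
}

/* Server side, run in-process for this sketch: unmarshal, compute, marshal. */
size_t loopback_server(const uint8_t *req, size_t req_len,
                       uint8_t *reply, size_t reply_cap)
{
    uint32_t n = get_u32(req);
    uint32_t sum = 0;
    assert(req_len == 4 + 4 * (size_t)n && reply_cap >= 4);
    for (uint32_t i = 0; i < n; i++)
        sum += get_u32(req + 4 + 4 * i);
    put_u32(reply, sum);
    return 4;
}
```

For example, `remote_sum(loopback_server, data, 3)` looks like an ordinary call to the caller, while every argument actually travels through a byte buffer.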

RPC has different failure modes:

- PRO: The callee cannot trash the caller's memory, and vice versa (hard modularity).

- CON: Messages can get lost.

- CON: Messages can get corrupted.

- CON: Messages can get duplicated.

- CON: The network can go down or be slow.

- CON: The server can go down or be slow.
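Corrupted messages are usually caught by sending a checksum along with each message: the receiver recomputes it and discards (and asks for a resend of) any message that doesn't match. A minimal C sketch using Fletcher-16, chosen here only because it is short; real protocols typically use CRCs or stronger checks. `message_ok` is a hypothetical helper name.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Fletcher-16 checksum over a message. */
uint16_t fletcher16(const uint8_t *data, size_t len)
{
    uint16_t sum1 = 0, sum2 = 0;
    assert(data != NULL || len == 0);
    for (size_t i = 0; i < len; i++) {
        sum1 = (uint16_t)((sum1 + data[i]) % 255);
        sum2 = (uint16_t)((sum2 + sum1) % 255);
    }
    return (uint16_t)((sum2 << 8) | sum1);
}

/* Receiver-side check: nonzero if the message matches the checksum the
   sender attached. On a mismatch, the stub discards the message and the
   request is resent. */
int message_ok(const uint8_t *data, size_t len, uint16_t sent_checksum)
{
    return fletcher16(data, len) == sent_checksum;
}
```

A checksum only detects corruption; it does nothing about lost or duplicated messages, which need timeouts and retries.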

What should a stub/wrapper do:

-

If corruption - resend

-

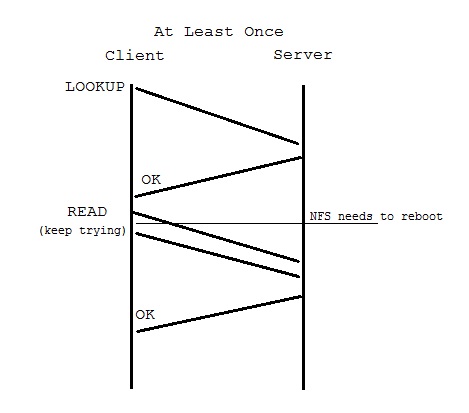

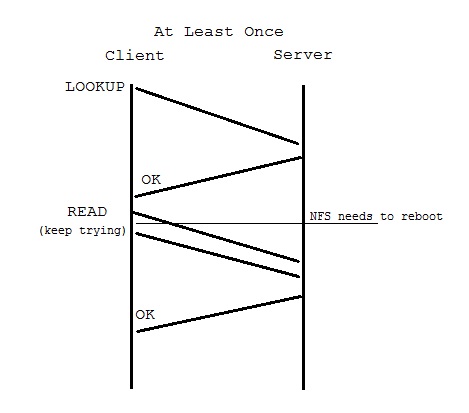

If no response - possibilities are:

-

Keep trying - at least once RPC (suitable for idempotent operations).

-

Give up, return error - at most once RPC (suitable for transactional operations).

-

Exactly once RPC (Holy Grail of RPC).
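The two practical retry policies can be contrasted in a short C sketch. It assumes a hypothetical `try_send_fn` hook that sends one request and reports whether a reply arrived before a timeout; the caller cannot tell whether the request, the reply, or the server was lost. `max_tries` stands in for "forever" in a real at-least-once stub, and `flaky_send` is a hypothetical test double that drops the first few requests.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical hook: send one request, return true if a reply arrived
   before the timeout. */
typedef bool (*try_send_fn)(void *ctx);

enum rpc_status { RPC_OK, RPC_NO_RESPONSE };

/* At-least-once: resend until a reply arrives. The server may execute the
   request more than once, so only use this for idempotent operations
   (e.g., "read block 7"). */
enum rpc_status call_at_least_once(try_send_fn send, void *ctx, int max_tries)
{
    assert(max_tries > 0);
    for (int i = 0; i < max_tries; i++)
        if (send(ctx))
            return RPC_OK;
    return RPC_NO_RESPONSE;
}

/* At-most-once: send once; on timeout report an error rather than risk
   running the operation twice (e.g., "transfer $100"). */
enum rpc_status call_at_most_once(try_send_fn send, void *ctx)
{
    return send(ctx) ? RPC_OK : RPC_NO_RESPONSE;
}

/* Test double: loses the first `drops` requests, then answers. */
struct flaky_link { int drops; int attempts; };

bool flaky_send(void *ctx)
{
    struct flaky_link *fl = ctx;
    fl->attempts++;
    return fl->attempts > fl->drops;
}
```

With two dropped requests, the at-least-once call succeeds on the third attempt, while the at-most-once call reports an error after one; neither gives exactly-once semantics.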

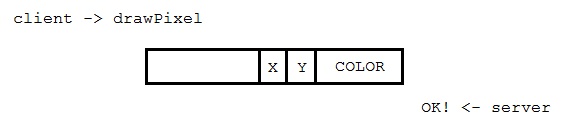

RPC examples:

-

HTTP client -> "GET /foo/bar.html HTTP\r\n"

Server reponse -> "HTTP /1.1 200 OK\r\n"

-

SOAP (Simple Access Object Protocol)

-

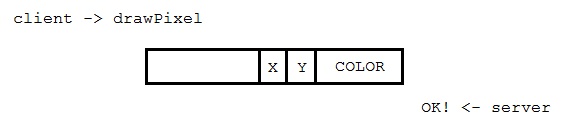

X - Remote screen display

-

Works even if they are in the same machine.

-

Use of higher level primitives (e.g. fillRectangle).
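The HTTP example is RPC over a text protocol: the request and the status line are plain strings. A minimal C sketch of the client side, assuming HTTP/1.1 (which requires a `Host` header, with a blank line ending the headers); `example.com` and the function names are illustrative, and a real client would also send the request over a socket and read the headers and body.

```c
#include <assert.h>
#include <stdio.h>

/* Format an HTTP/1.1 GET request into out.
   Returns the length written, or -1 if it didn't fit. */
int format_get(char *out, size_t cap, const char *host, const char *path)
{
    assert(out != NULL);
    int n = snprintf(out, cap, "GET %s HTTP/1.1\r\nHost: %s\r\n\r\n", path, host);
    return (n < 0 || (size_t)n >= cap) ? -1 : n;
}

/* Parse a status line such as "HTTP/1.1 200 OK\r\n".
   Returns the status code, or -1 if it doesn't look like HTTP. */
int parse_status(const char *resp)
{
    int major, minor, code;
    if (sscanf(resp, "HTTP/%d.%d %d", &major, &minor, &code) != 3)
        return -1;
    return code;
}
```

Text protocols like this sidestep byte-order issues entirely, at the cost of parsing on both ends.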

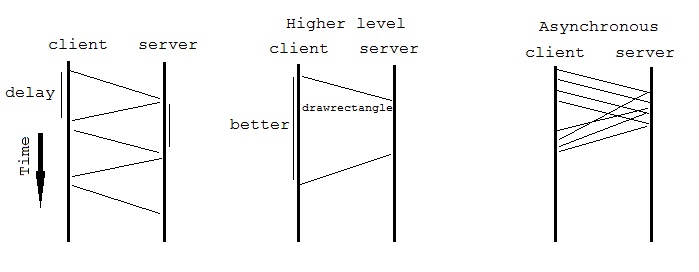

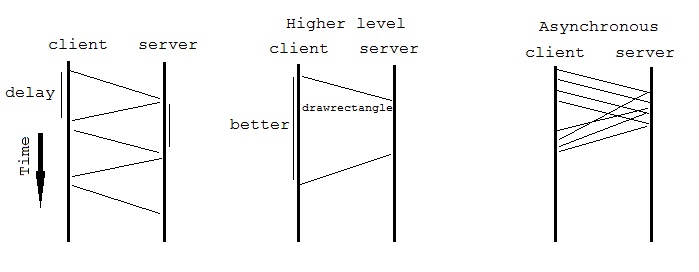

Performance Issues with RPC

Solutions:

- Have higher-level primitives (fewer, larger requests instead of many small ones).

- Asynchronous RPC: better performance, but can complicate the caller.

- Cache in the caller (for simple stuff).
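Caching in the caller can be sketched as a small direct-mapped table in front of the remote call. This is a minimal illustration: `remote_get_size` is a hypothetical remote procedure that just counts invocations so the effect of the cache is visible, and the cache never invalidates entries, so it is only safe when stale answers are acceptable.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical remote procedure; in a real system each call is a network
   round trip. Here it only counts how often it is invoked. */
static int remote_calls = 0;

int remote_get_size(int file_id)
{
    remote_calls++;
    return file_id * 100; /* stand-in for the server's answer */
}

#define CACHE_SLOTS 16

struct cache_entry { bool valid; int key; int value; };
static struct cache_entry cache[CACHE_SLOTS];

/* Answer repeated questions locally instead of paying for another RPC.
   Direct-mapped: each id has one slot, and a colliding id evicts it. */
int cached_get_size(int file_id)
{
    assert(file_id >= 0);
    struct cache_entry *e = &cache[(unsigned)file_id % CACHE_SLOTS];
    if (e->valid && e->key == file_id)
        return e->value;                 /* hit: no network traffic */
    e->value = remote_get_size(file_id); /* miss: do the RPC */
    e->key = file_id;
    e->valid = true;
    return e->value;
}
```

The hard part in practice is not the table but deciding when a cached answer has gone stale, which is exactly the consistency problem NFS runs into.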

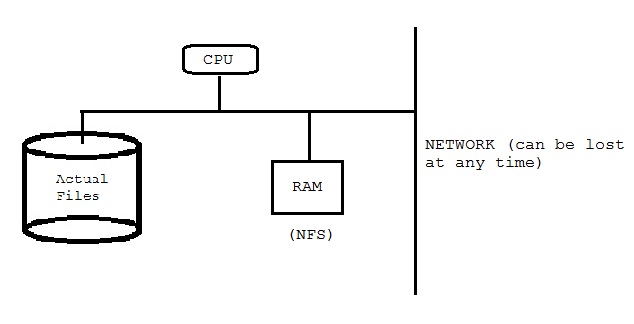

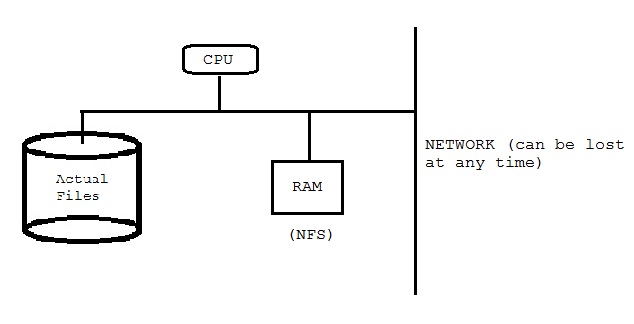

NFS (Network File System): a file system built atop RPC

The NFS protocol is like the UNIX file system but on wheels.

- LOOKUP (dirfh, name) // Returns fh and attributes (size, owner, etc.)

  fh = file handle, a unique ID for a file within a file system

- CREATE (dirfh, name, attr) // Returns file handle and attributes

- REMOVE (dirfh, name) // Returns status

- READ (fh, size, offset) // Returns data

- WRITE (fh, size, offset, data) // Returns status
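Because LOOKUP takes a directory handle and a single name (as in NFS versions 2 and 3), the client resolves a path itself, one LOOKUP per component, starting from the root file handle it got when mounting. A minimal C sketch, assuming a toy in-memory table in place of the server; real file handles are opaque byte strings, and the small integers, directory names, and function names here are hypothetical.

```c
#include <assert.h>
#include <string.h>

/* Toy stand-in for the server's namespace: in directory dirfh,
   name maps to fh. */
struct dirent_rec { int dirfh; const char *name; int fh; };

static const struct dirent_rec server_dir[] = {
    { 1, "home", 2 }, { 2, "user", 3 }, { 3, "notes.txt", 4 },
};

/* Hypothetical LOOKUP(dirfh, name); in real NFS each call is one RPC.
   Returns the child's fh, or -1 if not found. */
int nfs_lookup(int dirfh, const char *name)
{
    for (size_t i = 0; i < sizeof server_dir / sizeof server_dir[0]; i++)
        if (server_dir[i].dirfh == dirfh && strcmp(server_dir[i].name, name) == 0)
            return server_dir[i].fh;
    return -1;
}

/* Client-side path resolution: one LOOKUP per path component.
   Stores the number of LOOKUPs issued in *rpcs. */
int resolve_path(int rootfh, const char *path, int *rpcs)
{
    char comp[256];
    int fh = rootfh;
    assert(rpcs != NULL);
    *rpcs = 0;
    while (*path) {
        while (*path == '/')
            path++;                       /* skip slashes */
        if (!*path)
            break;
        size_t len = strcspn(path, "/");  /* length of next component */
        if (len >= sizeof comp)
            return -1;
        memcpy(comp, path, len);
        comp[len] = '\0';
        path += len;
        fh = nfs_lookup(fh, comp);
        (*rpcs)++;
        if (fh < 0)
            return -1;
    }
    return fh;
}
```

Resolving "/home/user/notes.txt" costs three round trips, which is one reason NFS clients cache lookup results.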

We want NFS to be reliable even if the file server reboots.

"Stateless" server:

- Whenever the client does a write, it has to wait for a response before continuing.

- The NFS server can't respond to a write request until the data hits disk.

- So NFS will be slow for writes, because it forces writes to be synchronous.

- To fix this problem, we "cheat":

  - Use flash on the server to store pending write requests.

  - Writes don't really wait for the server to respond; if a write fails, a later close will fail.

  Applications can call fsync (wait until the file's data and metadata are on disk) or fdatasync (wait until the data, plus only the metadata needed to read it back, is on disk) to make sure data is written, but these operations slow performance.

- In general, clients won't always see a consistent state.

- NFS by design doesn't have read/write consistency (for performance reasons).

- It does have close-to-open consistency: when a client closes a file, its pending writes are flushed to the server, and a client that opens the file afterward sees them.
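Since NFS writes may not reach the server until later, an application that cares about durability has to check for errors at fsync and close, not just at write; the Linux close(2) manual page warns that on NFS, errors from earlier writes may only be reported at close. A minimal POSIX sketch of that pattern; `write_durably` is a hypothetical helper name.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <unistd.h>

/* Write buf to path and report success only once the data is on stable
   storage. Errors may surface at write(), fsync(), or close(), so every
   return value is checked. Returns 0 on success, -1 on failure. */
int write_durably(const char *path, const void *buf, size_t len)
{
    assert(path != NULL);
    int fd = open(path, O_WRONLY | O_CREAT | O_TRUNC, 0644);
    if (fd < 0)
        return -1;
    const char *p = buf;
    while (len > 0) {
        ssize_t n = write(fd, p, len);
        if (n <= 0) { /* error (or no progress) */
            close(fd);
            return -1;
        }
        p += n;
        len -= (size_t)n;
    }
    /* fdatasync(fd) would also do here if metadata such as the
       modification time need not be flushed. */
    if (fsync(fd) != 0) {
        close(fd);
        return -1;
    }
    return close(fd) == 0 ? 0 : -1;
}
```

The fsync call is what makes this slow: it forces the synchronous round trip that NFS's "cheats" try to avoid.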
