CS 111 Scribe Notes

Lecture 3: Modularity and Virtualization

Presented: Thursday, September 30, 2010

Written by: Tran Dam, Amir Vajid, Richard Yu, Eric Zheng

Contents

1 From Last Lecture

1.1 Exercise: Print to Screen

2 How Can We Improve Our Code from Last Lecture?

2.1 Problem: Code Duplication

2.2 Errors Are Not Reported

2.3 Improve Performance through Efficient Usage of the Bus

2.4 Double Buffering

3 Modularity

3.1 Motivation

3.2 Modularity Metrics

3.2.1 A Note About Robustness

4 API: Application Programming Interface

4.1 Complications

4.2 Properties

4.2.1 Virtualization

4.2.2 Abstraction

5 Supporting and Enforcing Virtualization

5.1 Function Call Modularity

5.2 Soft Modularity

1 From Last Lecture

1.1 Exercise: Print to Screen

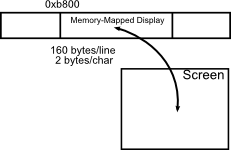

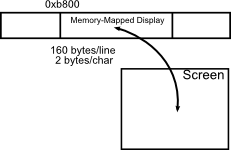

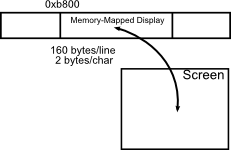

Last lecture, we were left with the exercise to write to console, a

memory-mapped device located at 0xb8000. In this example, we have a 25x80

character screen in which every adjacent pair of bytes represents one

character, giving 160 bytes per line.

We will use the uint16_t data structure, a data type of unisigned 16-bit

integers, to represent our 2-byte characters. The benefit of using this data

type is that it does not vary depending on the machine. A uint16_t data

type is 16 bits on a 32-bit machine and a 64-bit machine, while a short may

vary.

First, we will create a pointer to the display memory. The pointer is offset so

that, when we store text, it will print roughly in the middle of the screen.

In this exercise, we are printing numbers to the screen. As shown in the code

sample below, we take the bottom order digit of the integer and add the

character `0' as an offset to ensure that it is in the range of the ASCII

characters `0' to `9'. The character is stored and the pointer shifts to the

left, since we are printing from right to left (least significant to most

significant digit) on the screen. The loop continues while we have a non-zero

digit that needs to be printed to the screen. Because we are manually printing

at a low level, our implementation is much faster than calling the

printf() function.

We will use the uint16_t data structure, a data type of unisigned 16-bit

integers, to represent our 2-byte characters. The benefit of using this data

type is that it does not vary depending on the machine. A uint16_t data

type is 16 bits on a 32-bit machine and a 64-bit machine, while a short may

vary.

First, we will create a pointer to the display memory. The pointer is offset so

that, when we store text, it will print roughly in the middle of the screen.

In this exercise, we are printing numbers to the screen. As shown in the code

sample below, we take the bottom order digit of the integer and add the

character `0' as an offset to ensure that it is in the range of the ASCII

characters `0' to `9'. The character is stored and the pointer shifts to the

left, since we are printing from right to left (least significant to most

significant digit) on the screen. The loop continues while we have a non-zero

digit that needs to be printed to the screen. Because we are manually printing

at a low level, our implementation is much faster than calling the

printf() function.

1 void write_integer_to_console (int n)

2 {

3 uint16_t* p = (uint16_t*) 0xb8014;

4 do {

5 *p-- = '0' + n % 10;

6 n /= 10;

7 } while(n);

8 }

2 How Can We Improve Our Code from Last Lecture?

Our application from the last class is now complete. To run it, we just power

on the computer, and the word count is quickly computed and output. It is much

faster than running a full operating system like Windows or Linux, and it is

more secure, since we wrote all of the code ourselves. But it is still not

quite ready.

2.1 Problem: Code Duplication

We have multiple copies of read_ide_sector(). There are three copies of

this function: in the MBR, the VBR, and in our application. It would be nice if

we could just have one copy. For example, we could have the convention that

read_ide_sector() is located exactly 100 bytes from the start of the

MBR. Recall that the master boot record is always read into the same location

in memory (the BIOS always puts it in the same place). The VBR and our

application can then just call this copy in the MBR.

We could do even better by just putting our routine in the BIOS so that

everyone knows it is there. In fact, to some extent, this was the original

design plan for the BIOS. The BIOS was intended to contain subroutines,

interrupt service routines, drivers, etc. that were meant to be shared all by

operating systems and by all applications.

The downside to this approach is that it is brittle. A change to what

read_ide_sector() does could be incompatible with any of the programs that

use it. There are also modularity issues in that there are extra dependencies

between the BIOS and your application that were not there before. This was a

more popular when RAM was more scarce and multiple copies of code could not be

stored all at once.

2.2 Errors Are Not Reported

When communicating with another device, there is always the possibility that

something could go wrong. For example, the disk could never become ready or

there could be read errors, but neither of these are reported in our program.

We need to change the API for read_ide_sector() and have it return a

value that indicates whether or not an error was discovered. When a read error

occurs, the disk controller returns a failure bit that we can check. To check

for the disk never becoming ready, a counter can be used and report the failure

after some large number of attempts.

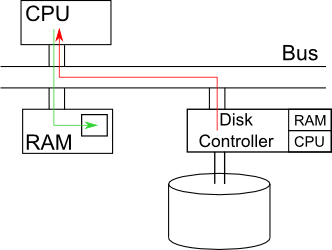

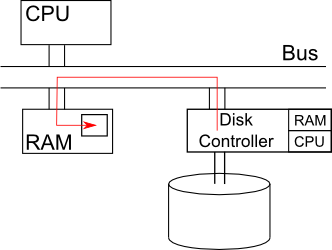

2.3 Improve Performance through Efficient Usage of the Bus

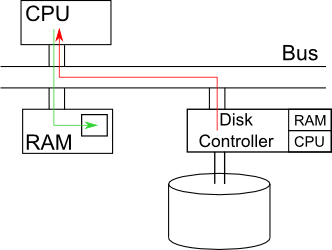

We are currently using the insl() instruction to read from the disk. As

seen in the picture below, there is a bus in the paranoid professor's computer

that connects our three main devices together: the CPU, the RAM and the disk

controller. The disk controller has its own RAM (mostly used to cache data) and

its own CPU. When you issue the insl() instruction, the data leaves the

disk controller and goes to the CPU. The insl() instruction then realizes

the data should be sent to RAM so it sends it there next. The problem here is

that it takes up twice as much bus bandwidth as we would like, since it uses

the bus twice.

Side Note: Cosmic rays are single

photons from outer space and the

biggest one can contain as much

energy as a thrown baseball (in

a much smaller space). These

cosmic rays could create errors

in places like your memory that

cannot be checked. On average,

there is probably a memory bit

flip in your laptop about once

every eight hours. |

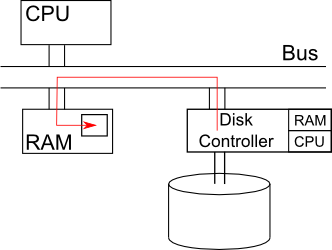

To improve performance, we should use a different approach: Direct Memory

Access (DMA). In this approach, the CPU issues a command to the disk controller

telling it to copy the data out of its cache directly into some location in

RAM. The bus is the main delay bottle neck in our program, this will improve

performance by a factor of 2. It is almost universally used today to access

disks or other high bandwidth devices.

To improve performance, we should use a different approach: Direct Memory

Access (DMA). In this approach, the CPU issues a command to the disk controller

telling it to copy the data out of its cache directly into some location in

RAM. The bus is the main delay bottle neck in our program, this will improve

performance by a factor of 2. It is almost universally used today to access

disks or other high bandwidth devices.

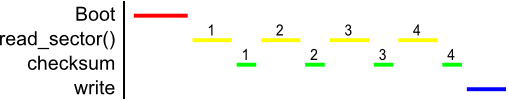

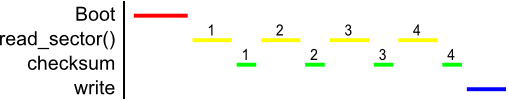

Consider another application that is more CPU-intensive such as computing an

SHA checksum of 512 bits. We will assume that the time it takes to compute the

checksum is 7.5 ms per 512 byte buffer, and the time it takes to run

read_ide_sector() is 10 ms. Below is a timing diagram of an execution of the

application. After the boot, the program continuously reads data into the

buffer and immediately computes the checksum for this data. This continues

until all of the data has been processed, and a write to console is issued.

Consider another application that is more CPU-intensive such as computing an

SHA checksum of 512 bits. We will assume that the time it takes to compute the

checksum is 7.5 ms per 512 byte buffer, and the time it takes to run

read_ide_sector() is 10 ms. Below is a timing diagram of an execution of the

application. After the boot, the program continuously reads data into the

buffer and immediately computes the checksum for this data. This continues

until all of the data has been processed, and a write to console is issued.

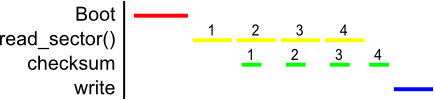

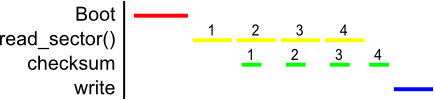

2.4 Double Buffering

To speed this up, we can utilize double buffering. With two buffers, we

can compute the checksum on the previously read buffer while we read more data

into another buffer. This allows us to compute a checksum while we wait for the

disk to give us more data. Note that we are not actually running these

processes in parallel on the processor, since there is just one CPU. When we

read, all we are doing is sending a command to the disk controller to get more

data, which barely uses the main CPU. After issuing the command to the disk

controller, we can start computing the checksum. Thus, because reading the disk

barely uses any CPU resources, the tasks can be run simultaneously. Once the

checksum is computed, we can ping the disk controller asking if its task is

completed. This way we do not waste CPU resources by pinging until after we

have finished our computations.

There are a few details in this process that are worth addressing. First, note

that the checksum is delayed by one cycle, since data must first be

read before a checksum can be computed. Also note that in our example, reading

the disk took a little longer than computing the checksum, so reading was our

bottleneck: the processor could not compute the next checksum until the data

was read into the buffer. However, if computing the checksum took longer, it

would be the bottlenecking process, and the disk controller would be idle for

some time waiting for the next location that needs to be read. Lastly, it is

important to note that double buffering would not be useful if one task took

significantly longer than the other. For example, if computing the checksum

only took 0.001 ms, we might as well just compute the checksum in between

reads, since it doesn't add much delay when done serially.

There are a few details in this process that are worth addressing. First, note

that the checksum is delayed by one cycle, since data must first be

read before a checksum can be computed. Also note that in our example, reading

the disk took a little longer than computing the checksum, so reading was our

bottleneck: the processor could not compute the next checksum until the data

was read into the buffer. However, if computing the checksum took longer, it

would be the bottlenecking process, and the disk controller would be idle for

some time waiting for the next location that needs to be read. Lastly, it is

important to note that double buffering would not be useful if one task took

significantly longer than the other. For example, if computing the checksum

only took 0.001 ms, we might as well just compute the checksum in between

reads, since it doesn't add much delay when done serially.

3 Modularity

3.1 Motivation

Although we can now efficiently transfer data and quickly run programs, there

still exist some problems to our design:

- It is too hard to change: modifications must be applied to each

application independently.

- We cannot run several programs simultaneously.

- We cannot reuse parts of other programs.

- It is hard to recover from faults.

As a starting point, we will concern ourselves with problems 1 and 3.

Adding modularity can be good or bad. On the one hand, it hurts performance

because there are extra function calls, but on the other hand it makes

programming and documentation much simpler. Overall, the main goals of

modularity are to maintain the system's performance, simplicity,

robustness, flexibility, and portability.

3.2 Modularity Metrics

| Performance | Programs should run quickly and be memory efficient. |

| Simplicity | Programs should be easy to learn, use, and document. |

| Robustness | Programs should be able to work in a harsh operating environment. |

| Flexibility/Neurtrality | Programs should not assume past what they need to

run, e.g., pipe (A | B) does not assume anything about the types of data. |

| Portability | Code should be easy to reuse between programs.

|

3.2.1 A Note About Robustness

There are two major philosophies for how robustness should be approached. The

MIT philosophy is that programs should be very robust from the get-go. This is

a philosophy heavily echoed by those in academia. However, the Berkeley method

is to make the program work and fix it later. This is the approach most

preferred by industries, which ship out products and update them later, because

it allows the program to ship earlier.

4 API: Application Programming Interface

This is what we call the interface between modules. Let us begin with an

example of a bad API:

void read_ide_sector(int s, char buf[512]);

which is what we developed last time. A better API would look something like

this (POSIX):

ssize_t read(int fd, void *buf, size_t count);

This supports the use of multiple devices, thanks to the file

abstraction, and fd, the file descriptor. For example, we can deal with the

familiar construct called files.

The file abstraction increases complexity, but we will have more

flexibility; specifically, we can use the file abstraction to deal with

anything that behaves like a file, like sockets and pipes.

Using a void *buf means that we can use any data type for our read

output. The function returns a success indicator of type ssize_t, a

signed size type, which communicates back to the function caller, e.g.,

negative values indicate an error, signalling the caller to see errno for

more details.

There is always the problem of architecture decisions like ssize_t and

size_t, but in general, this works great.

4.1 Complications

We do have one bug: SSIZE_MAX < SIZE_MAX. This means that we can

potentially return an error code when we have a successful read (remember

overflow?). Our elegant, respectable solution: Please don't do it.

The read() function is still a bit inflexible, though: How should we do

random access reads? We can use a different function to set our position in the

file:

off_t lseek(int fd, off_t offset, int whence);

It is similar to read(), but with an offset from a position dictated by

the flag. 0: start; 1: now; 2: end.

We provide an alternative function that behaves more like read() as well:

ssize_t pread(int fd, void *buf, size_t count, off_t offset);

This combines the features of read() and lseek() to allow us to

perform random access reads with relative ease.

4.2 Properties

We note that read() has two properties: Virtualization and

Abstraction.

4.2.1 Virtualization

read() pretends to control a machine that doesn't actually exist (the

file abstraction) implemented using low-level constructs (insl, etc.).

4.2.2 Abstraction

The virtualized machine is simpler and easier to understand compared to the

real machine.

5 Supporting and Enforcing Virtualization

We want modularity in our systems. Virtualization is used to support

modularity.

Last time, we did not properly virtualize: we ran insl directly. But in a

sense, we did virtualize: we used function call modularity

(read_ide_sector()).

5.1 Function Call Modularity

We started at a kind of level 0: we had a bunch of global variables and

functions that looked into each other, running on top of bare metal with no

virtualization. Then we moved to a kind of level 0.1: we implemented a function

called read_ide_sector() that followed function call modularity.

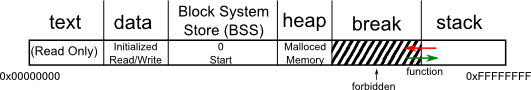

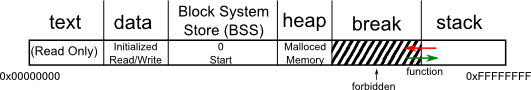

Let us consider the classic virtualization called RAM. We allocate different

sections of RAM to different purposes:

- text for instructions

- data for initialized global and static variables

- bss for uninitialized global and static variables

- heap for dynamic memory allocation

- stack for function activation records and variables

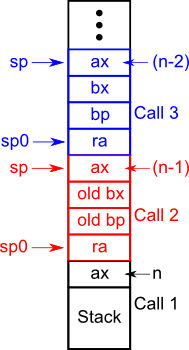

Functions grow on the stack. Consider the following recursive function to

compute a factorial:

Functions grow on the stack. Consider the following recursive function to

compute a factorial:

1 int fact(int n){

2 if(n == 0)

3 return 1;

4 else

5 return n * fact(n - 1);

6 }

If we compile this code with gcc with the do-not-optimize flag, we

produce the following machine instructions:

1 pushl %ebp ; *--sp = bp;

2 movl $1, %eax ; ax = 1;

3 movl %esp, %ebp ; bp – sp;

4 subl %8, %esp ; sp -= 2;

5 movl %ebx, -4(ebp) ; bp[-1] = bx;

6 movl 8(%ebp), %ebx ; bx = bp[2];

7 testl %ebx %ebx ; if(bx)

8 jne L5 ; goto L5;

9 L1: movl -4(%ebp), %ebx ; bx = bp[1];

10 movl %ebp, %esp ; sp = bp;

11 popl %ebp ; bp = *sp++;

12 ret ; goto *sp++;

13 L5: leal -1(%ebx), %eax ; ax = bx-1;

14 movl %eax, (%esp) ; *sp = ax;

15 call fact ; *--sp = next instruction; goto fact;

16 imull %ebx, %eax ; ax = ax*bx;

17 jmp L1 ; goto L1;

We perform several actions on the registers and the stack:

- Register bx contains n

- Register ax contains the return value (by convention).

- Register sp contains the stack pointer, which points to the

top of the stack.

- Register bp contains the frame/base pointer, which points

to the stack frame.

Consider calling fact(n):

We have a contract between the caller and callee functions:

- Return address is stored in *sp

- First parameter is stored in *(sp+1)

- Call to function

5.2 Soft Modularity

What if the caller screws up? For example, putting garbage into the return

address or setting the stack pointer to 0 before the call can ruin program

flow.

What if the callee screws up? For example, looping infinitely and never

returning control to the caller.

This virtualization has these flaws, making it a type of soft modularity.

We can only function if the caller and callee place complete trust in one

another and maintain the same standards. What do we do about adversaries that

want to bring down the system?

What we want is hard modularity.

File translated from

TEX

by

TTH,

version 3.89.

On 2 Oct 2010, 12:15.