to test performance of policies use refereence strings

ex. 0 1 2 3 0 1 4 0 1 2 3 4 <= string of virtual page #'s

FIFO:

Assume 3 physical pages (Δ = page fault)

| 0 | 1 | 2 | 3 | 0 | 1 | 4 | 0 | 1 | 2 | 3 | 4 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| A | Δ0 | 0 | 0 | Δ3 | 3 | 3 | Δ4 | 4 | 4 | 4 | 4 | 4 |

| B | Δ1 | 1 | 1 | Δ0 | 0 | 0 | 0 | 0 | Δ2 | 2 | 2 | |

| C | Δ2 | 2 | 2 | Δ1 | 1 | 1 | 1 | 1 | Δ3 | 3 |

cost = 9 faults

4 physical pages

0 1 2 3 0 1 4 0 1 2 3 4 A Δ0 0 0 0 0 0 Δ4 4 4 4 Δ3 3 B Δ1 1 1 1 1 1 Δ0 0 0 0 Δ4 C Δ2 2 2 Δ1 1 1 1 1 Δ3 3 D Δ3 3 3 3 3 3 Δ2 2 2

3 physical page => 9pg faults

4 physical page => 10pg faults

5 physical page => 5pg faultsthis is an example of Belody's Anomoly(adding cache can slow you down)

Optimal page replacement policy (Oracle subroutine)

Look into the future (ie, tail of reference string)

Look for page that isn't needed for longest time

0 1 2 3 0 1 4 0 1 2 3 4 A Δ0 0 0 0 0 0 0 0 0 Δ2 2 2 B Δ1 1 1 1 1 1 1 1 1 Δ3 3 C Δ2 Δ3 3 3 Δ4 4 4 4 4 4 cost = 7 faults

Oracle only available sometimes, for instance, a payroll program gets run nightly and generates a similar reference string every night. Because the reference string only has minor variations, we can use an oracle subroutine based on results of previous runs.

Least Recently Used (directly from temporal locality)

| 0 | 1 | 2 | 3 | 0 | 1 | 4 | 0 | 1 | 2 | 3 | 4 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| A | Δ0 | 0 | 0 | Δ3 | 3 | 3 | Δ4 | 4 | 4 | Δ2 | 2 | 2 |

| B | Δ1 | 1 | 1 | Δ0 | 0 | 0 | 0 | 0 | 0 | Δ3 | 3 | |

| C | Δ2 | 2 | 2 | Δ1 | 1 | 1 | 1 | 1 | 1 | Δ4 |

cost = 10 faults

Usually works better than FIFO, but this is a special case designed to show Belody's.How to implement LRU:

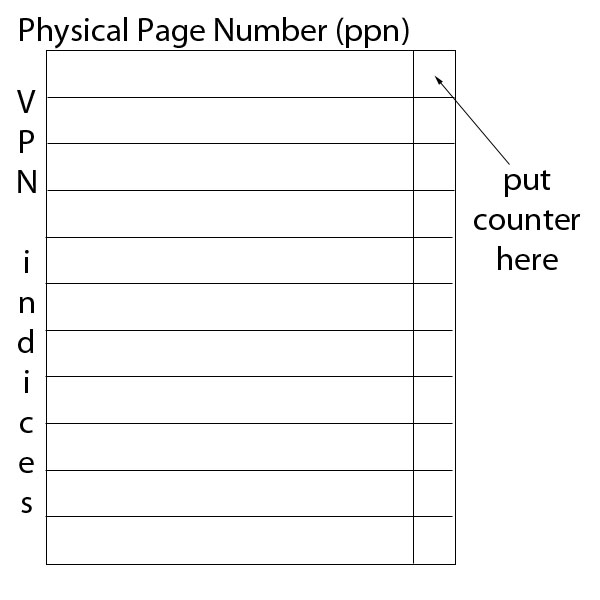

One level page table

In practice, counter is an estimate and might be kept in our own table only to be updated on a page fault. If it's really updated every time then we suffer huge performance hit. We could do it in hardware, but many don't want to add hardware support. When counter overflows, renormalize values.

Ideas for improving virtual memory performance

- better policy for replacement

- demand paging – don't load until program requests as opposed to read program into RAM, then execute

N pages in program

U pages used 0 ≤ U ≤ N

C avg cost of reading a page

F cost of faultingcost latency ordinary paging NC NC demand paging U(C+F)+UC+(U-1)F C - Dirty bit – suppose the victim has never been changed, then we don't need to write it out

- Every time a store instr. Issued, dirty bit is updated

- Hardware support (many processors already do it)

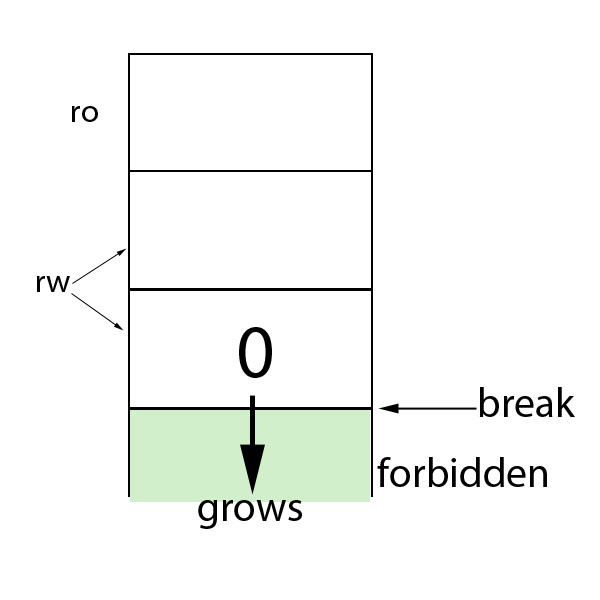

- Mark all pages read only at first

Update to rw on first pg fault & set dirty bit in software

2 classic VM tricks

- heap manager of Bourre Shell

signal handler for SIGSEV

it allocated memory

sbrk(4096) - add 4096 to the "break"

+ avoid sbrk overload

- disabled some valuable checking

- vfork

acts like fork except:

- child and parent share memory

- parent is frozen until child exits or executes

-

-non orthogonal controversal

-

child+parent get no access

pages cloned as needed

VM efficiency

FS efficiency

Disk scheduling algorithms

pending requests of accesses to blocks on disk

FIFO is simple, execute requests in the order received

Simple modelCost = seek time

At block I, seek to block j = |i-j|FCFS (first come first serve)

Scheduling policy

Avg cost (assuming random access to disk)Avg seek time is ¼ for h = .5

Avg seek time is ½ for h = 0Shortest Seek Time First (SSTF) - Always choose closest request

+ minimizes latency

+ maximizes throughput

- starvationElevator Algorithm (even better)

Uses SSTF but in a direction, until no more in that direction, then turns around

+ no starvation

- not quite as good latency/throughput (higher average wait time on ends)Circular Algorithm (better still)

Elevator with wrap around

Always scans in positive direction+ more fair

- worse throughput & latency than elevator>

Anticipatory scheduling – dallying - wait for something close & based on reference string for wait timeRobustness constraints for file system

- Eg update multiple blocks to implement 'rename' (Order is important!!)

- But all disk scheduling algorithms (except FCFS) reorder requests

- So we put dependencies on requests algorithms must obey (BSD File Systems)